- by architectureau

- 24 Nov 2024

Artificial intelligence and design: Questions of ethics

As machines behave in increasingly "human-like" ways, questions of ethics arise in relation to the design and use of AI technologies. Architect and academic Nicole Gardner explains why it's vital for designers to understand the fundamental principles of AI systems.

- 06 Mar 2024

- in architects

Artificial intelligence (AI) is a very old idea, but the term AI and the field of AI as it relates to modern programmable digital computing have taken their contemporary forms in the past 70 years.1 Today, we interact with AI technologies constantly, as they power our web search engines, enable social media platforms to feed us targeted advertising, and drive our streaming service recommendations. Nonetheless, the release of OpenAI's AI chatbot ChatGPT in November 2022 signalled a game change because it demonstrated to the wider public a capacity for machines to behave in a "human-like" way. ChatGPT's arrival also reignited questions and debates that have long preoccupied philosophers and ethicists. For example, if machines can behave like humans, what does this mean for our understanding of moral responsibility?

Across numerous professions, ethically charged questions are being asked about what tasks and responsibilities should be delegated to AI, what consequences might arise, and what or who is then responsible. These questions are concerned with how human (and non-human) actions, behaviours and choices affect the responsibilities we have to each other, to the environment and to future generations.

To discuss ethics in relation to AI, some definitions are required. Often, AI is misconstrued as a single thing, and narrowly conflated with machine learning (ML).2 Or, it is expansively associated with all kinds of algorithmic automation processes. When AI takes the form of a tool designed by humans to complete a task, AI is more correctly a system of technologies - including ML algorithms - that work together to simulate human intelligence or human cognitive functions such as seeing, conversing, understanding and analysing. Further, AI systems are typically designed to act "with a significant degree of *autonomy*" (emphasis mine) - a characteristic that is particularly important when it comes to ethical assessment.3

Ethics is both an intellectual endeavour and an applied practice that can help us grapple with the choices and dilemmas we face in our daily work and life. It relates to AI in two ways: "AI ethics" focuses on the ethical dilemmas that arise in the design and use of AI technologies, while the "ethics of AI" encompasses the principles, codes, roadmaps, guides and toolkits that have been created to foster the design of ethical AI. While these provisions regarding the ethics of AI are useful, ethical practice is about more than simply following rules. It involves examining and evaluating our choices in relation to their possible consequences, benefits and disbenefits - in short, exercising moral imagination. As technology ethicist Cennydd Bowles puts it, ethics is quite simply "a commitment to take our choices and even our lives seriously."4

There are many kinds of AI technologies and systems, and their technical differences as well as contexts of use can bear significantly on the nature and scale of their associated ethical conundrums. For example, the ethical problem of "responsibility attribution" is amplified by generative AI systems that use deep learning5 because their inner workings (what happens between the layers of a neural network) can defy explanation. So, the capacity to truly reckon with the ethical significance of AI technologies relies on our ability to understand fundamental technical principles of AI systems in the context of both their design and use.

Since the 1960s, architects have investigated and debated the potential for AI applications in and for the design process. Today, AI technologies are becoming central to the development of industry-standard software. Autodesk is rapidly expanding its suite of AI tools, including by acquiring AI-powered design software companies such as Spacemaker (now Autodesk Forma). To understand and address the complex ethical dilemmas that arise in relation to the design and use of AI technologies in architecture, we can refer to guides and frameworks,6 but we can also apply our innate design thinking skills. We can ask "ethical questions" - not to land on a "yes" or a "no," but to help us think through scenarios. This process gives us the opportunity to uncover the less obvious or unintended consequences and externalities that the use of AI might bring into play.7

Let's take an example: should designers use AI-powered automated space-planning tools in the design process? In this scenario, the stakeholders - those who stand to be impacted in some way - might include clients, designers, organizations, the profession and the environment. AI-driven space-planning tools could accelerate design processes and allow designers and clients to explore a broader range of layout options. Their use could also enable designers to spend more time on design evaluation, resulting in higher-quality design outputs. And the design firm might be able to reallocate saved time to upskill its employees in new competencies related to emerging technologies and digital literacy. But, the use of AI could also result in a redistribution of design labour and reduced employment. This ethical dilemma is common for most kinds of automation tools.

A dilemma more specific to AI-powered design tools concerns the ML training methods used to generate outputs. This connects to the responsible AI goal of "explainability" - the ability to comprehend how an AI system generates its outputs or makes decisions to identify impacts and potential hidden biases. For example, if an AI tool were trained on residential plans from another country, its spatial outputs might reflect country-specific cultural norms and regulatory requirements. This doesn't mean that we should abandon the AI tool, but it does obligate us to keep numerous humans (designers) in the loop. Because even if, by regulation, a design technology company is required to disclose its training data and/or training methods, both design knowledge and technical expertise will be needed to understand what that means in practice.

Zooming out, we must also recognize that AI ethics extends beyond human entities and includes our obligations to non-humans - that is, the environment and its myriad life forms. As AI researcher Kate Crawford has shown, AI technologies rely on an enormous and extractive ecosystem, "from harvesting the data made from our daily activities and expressions, to depleting natural resources, and to exploiting labour around the globe."8

AI technologies are morally significant because they are entangled in and mediate human decision-making in immeasurable ways. Architects, designers and educators must take responsibility for understanding how AI technologies operate - and what opportunities and pitfalls accompany their use in design practice. By enhancing digital literacy, and normalizing and scaffolding ethical reasoning skills in both the profession and in architecture and design education, we can see AI ethics not as a border guard but as an opportunity to "fertilise new ideas as well as weed out bad ones."9

- by foxnews

- descember 09, 2016

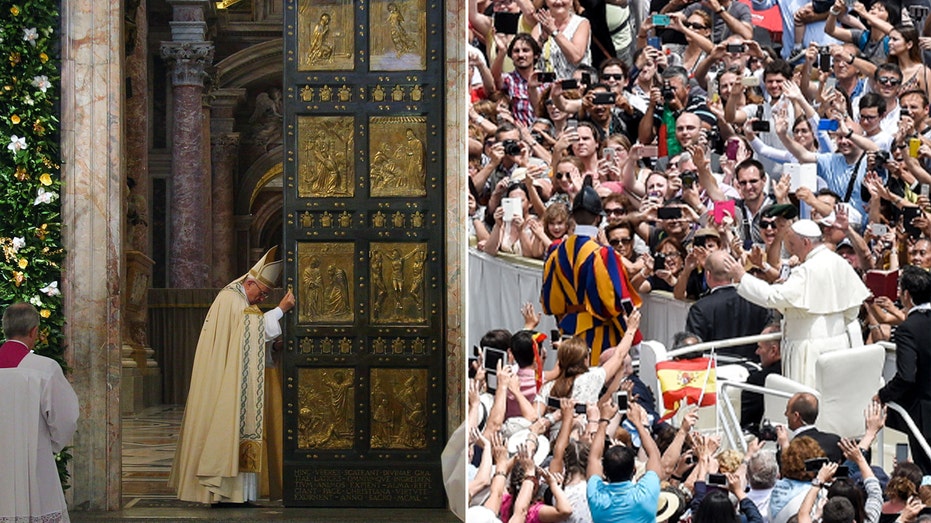

Italy expected to draw travelers by the millions as Pope Francis kicks off Holy Year

The 2025 Jubilee will bring tourists to the Vatican, Rome and Italy to celebrate the Catholic tradition of patrons asking for forgiveness of sins. Hope will be a central theme.

read more