The AI startup erasing call center worker accents: is it fighting bias - or perpetuating it?

- by theguardian

- 25 Aug 2022

- in technology

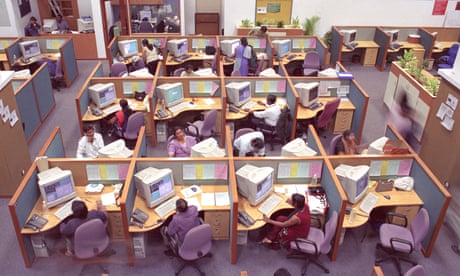

"Hi, good morning. I'm calling in from Bangalore, India." I'm talking on speakerphone to a man with an obvious Indian accent. He pauses. "Now I have enabled the accent translation," he says. It's the same person, but he sounds completely different: loud and slightly nasal, impossible to distinguish from the accents of my friends in Brooklyn.

Only after he had spoken a few more sentences did I notice a hint of the software changing his voice: it rendered the word "technology" with an unnatural cadence and stress on the wrong syllable. Still, it was hard not to be impressed - and disturbed.

The man calling me was a product manager from Sanas, a Silicon Valley startup building real-time voice-altering technology that aims to help call center workers around the world sound like westerners. It's an idea that calls to mind the 2018 dark comedy film Sorry to Bother You, in which Cassius, a Black man hired to be a telemarketer, is advised by an older colleague to "use your white voice". The idea is that mimicking the accent will smooth interactions with customers, "like being pulled over by the police", the older worker says. In the film, Cassius quickly acquires a "white voice" and his sales numbers shoot up, leaving the viewer with an uncomfortable feeling.

Accents are a constant hurdle for millions of call center workers, especially in countries like the Philippines and India, where an entire "accent neutralization" industry tries to train workers to sound more like the western customers they're calling - often unsuccessfully.

As reported in SFGate this week, Sanas hopes its technology can provide a shortcut. Using data about the sounds of different accents and how they correspond to each other, Sanas's AI engine can transform a speaker's accent into what passes for another one - and right now, the focus is on making non-Americans sound like white Americans.

Sharath Keshava Narayana, a Sanas co-founder, told me his motivation for the software dated back to 2003, when he started working at a call center in Bangalore, where he faced discrimination for his Indian accent and was forced to call himself "Nathan". Narayana left the job after a few months and opened his own call center in Manila in 2015, but the discomfort of that early experience "stayed with me for a long time", he said.

Marty Massih Sarim, Sanas's president and a call center industry veteran, said that call center work should be thought of as a "cosplay", which Sanas is simply trying to improve. "Obviously, it's cheaper to take calls in other countries than it is in America - that's for Fortune 100, Fortune 500, Fortune 1,000 companies. Which is why all the work has been outsourced," he said.

"If that customer is upset about their bill being high or their cable not working or their phone not working or whatever, they're generally going to be frustrated as soon as they hear an accent. They're going to say, I want to talk to somebody in America. The call centers don't route calls back to America, so now the brunt of that is being handled by the agent. They just don't get the respect that they deserve right from the beginning. So it already starts as a really tough conversation. But if we can just eliminate the fact that there's that bias, now it's a conversation - and people both leave the call feeling better."

Narayana said their software is already being used every day by about 1,000 call center workers in the Philippines and India. He said workers could turn it on and off as they pleased, although the call center's manager held the administrative rights for "security purposes only". User feedback has apparently been positive: Narayana claims agents have said they feel more confident on the phone when using the software.

Sanas touts its own technology as "a step towards empowering individuals, advancing equality, and deepening empathy". The company raised $32m in venture capital in June: one funder, Bob Lonergan, gushed that the software "has the potential to disrupt and revolutionize communication". But it also raises uncomfortable questions: is AI technology helping marginalized people overcome bias, or just perpetuating the biases that make their lives hard in the first place?

A Aneesh, a sociologist and the incoming director of the University of Oregon's School of Global Studies and Languages, has spent years studying call centers and accent neutralization. In 2007, as part of his research, the scholar - who speaks with a mix of Indian and American accents - got himself hired as a telemarketer in India, an experience he detailed in his 2015 book Neutral Accent: How Language, Labor and Life Become Global.

At the call center, he witnessed how his colleagues were put through a taxing process to change their accents. "The goal is to be comprehensible to the other side," he said. "The neutralization training that they were doing was just reducing slightly the thickness of regional accents within India to allow this thing to happen." Workers had to relearn pronunciations of words such as "laboratory", which Indians pronounce with the British stress on the second syllable. They also had to eliminate parts of Indian English - like the frequent use of the word "sir". They had to learn uniquely American words, including a list of over 30 street designations such as "boulevard", and memorize all 50 US states and capitals. "They have to mimic the culture as well as neutralize their own culture," Aneesh said. "Training takes a lot out of you."

In addition to the low base salary, Aneesh said one of the most difficult parts of the job was being forced to sleep all day and work all night to match US business hours - something biologists have found carries serious health risks, including cancer and pre-term births. It also isolated workers from the rest of society.

These are all inequalities that call center employers hope to conceal. Even the way callers are connected to each other is completely computerized and designed to maximize profit.

The sociologist has mixed feelings about Sanas. "In a narrow sense, it's a good thing for the trainee: they don't have to be trained as much. It's not very easy for an immigrant or for a foreigner sitting somewhere else in the world to be not understood because of their accent. And they sometimes get abused.

"But in the long view, as a sociologist, it's a problem."

The danger, Aneesh said, was that artificially neutralizing accents represented a kind of "indifference to difference", which diminishes the humanity of the person on the other end of the phone. "It allows us to avoid social reality, which is that you are two human beings on the same planet, that you have obligations to each other. It's pointing to a lonelier future."

Chris Gilliard, a researcher who studies privacy, surveillance and the negative impacts of technology on marginalized communities, said call center workers "exist to absorb the ire of angry customers. It looks a lot like other things like content moderation, where companies offload the worst, most difficult, most soul-sucking jobs to people in other countries to deal with," he said. Transforming the workers' accents wouldn't change that, he argued; it only "caters to people's racist beliefs".

"Like so many of the things that are pitched as the solution, it doesn't take into account people's dignity or humanity," he said. "One of the long-range effects is the erasure of people as individuals. It seems like an attempt to boil everybody down to some homogenized, mechanical voice that ignores all the beauty that comes from people's languages and dialects and cultures. It's a really sad thing."

Narayana said he had heard the criticism, but he argued that Sanas approaches the world as it is. "Yes, this is wrong, and we should not have existed at all. But a lot of things exist in the world - like why does makeup exist? Why can't people accept the way they are? Is it wrong, the way the world is? Absolutely. But do we then let agents suffer? I built this technology for the agents, because I don't want him or her to go through what I went through."

The comparison to makeup is unsettling. If society - or say, an employer - pressures certain people to wear makeup, is it a real choice? And though Sanas frames its technology as opt-in, it's not hard to envision a future in which this kind of algorithmic "makeup" becomes more widely available - and even mandatory. And many of the problems Narayana outlines from his own experience of working at a call center - poor treatment from employers, the degrading feeling of having to use a fake name - will not be changed by the technology.

After our interview, I emailed a sound demo of Sanas's technology to Aneesh to get his reaction. "Hearing it closely, I realized that there was a hint of emotion, politeness and sociality in the original caller's voice," he replied. That was gone in the digitally transformed version, "which sounds a bit robotic, flat and - ahem - neutral".