TechScape: The people charged with making sure AI doesn't destroy humanity have left the building

- by theguardian

- 22 May 2024

- in technology

Everything happens so much. I'm in Seoul for the International AI summit, the half-year follow-up to last year's Bletchley Park AI safety summit (the full sequel will be in Paris this autumn). While you read this, the first day of events will have just wrapped up - though, in keeping with the reduced fuss this time round, that was merely a "virtual" leaders' meeting.

When the date was set for this summit - alarmingly late in the day for, say, a journalist with two preschool children for whom four days away from home is a juggling act - it was clear that there would be a lot to cover. The hot AI summer is upon us:

Then, the weekend before the summit kicked off, everything kicked off at OpenAI as well. Most eye-catchingly, perhaps, the company found itself in a row with Scarlett Johansson over one of the voice options available in the new iteration of ChatGPT. Having approached the actor to lend her voice to its new assistant, an offer she declined twice, OpenAI launched GPT-4o with "Sky" talking through its new capabilities. The similarity to Johansson was immediately obvious to all, even before CEO Sam Altman tweeted "her" after the presentation (the name of the Spike Jonze film in which Johansson voiced a super-intelligent AI). Although OpenAI denied the similarity, it has removed the Sky voice option.

More importantly, though, the two men leading the company/nonprofit/secret villainous organisation's "superalignment" team - which was devoted to ensuring that its efforts to build a superintelligence don't end humanity - quit. First to go was Ilya Sutskever, the co-founder of the organisation and leader of the boardroom coup which, temporarily and ineffectually, ousted Altman. His exit raised eyebrows, but it was hardly unforeseen. You come at the king, you best not miss. Then, on Friday, Jan Leike, Sutskever's co-lead of superalignment, also left, and had a lot more to say:

Leike's resignation note was a rare insight into dissent at the group, which has previously been portrayed as almost single-minded in its pursuit of its - which sometimes means Sam Altman's - goals. When the charismatic chief executive was fired, it was reported that almost all staff had accepted offers from Microsoft to follow him to a new AI lab set up under the House of Gates, which also has the largest external stake in OpenAI's corporate subsidiary. Even when a number of staff quit to form Anthropic, a rival AI company that distinguishes itself by talking up how much it focuses on safety, the amount of shit-talking was kept to a minimum.

It turns out (surprise!) that's not because everyone loves each other and has nothing bad to say. From Kelsey Piper at Vox:

Barely a day later, Altman said the clawback provisions "should never have been something we had in any documents". He added: "we have never clawed back anyone's vested equity, nor will we do that if people do not sign a separation agreement. this is on me and one of the few times I've been genuinely embarrassed running openai; i did not know this was happening and i should have." (Capitalisation model's own.)

Altman didn't address the wider allegations of a strict and broad NDA, and while he promised to fix the clawback provision, nothing was said about the other incentives, carrot and stick, offered to employees to sign the exit paperwork.

As set-dressing goes, it's perfect. Altman has been a significant proponent of state and interstate regulation of AI. Now we see why it might be necessary. If OpenAI, one of the biggest and best-resourced AI labs in the world, which claims that safety is at the root of everything it does, can't even keep its own team together, then what hope is there for the rest of the industry?

It's fun to watch a term of art developing in front of your eyes. Post had junk mail; email had spam; the AI world has slop:

I'm keen to help popularise the term, for much the same reasons as Simon Willison, the developer who brought its emergence to my attention: it's crucial to have easy ways to talk about AI done badly, to preserve the ability to acknowledge that AI can be done well.

The existence of spam implies emails that you want to receive; the existence of slop entails AI content that is desired. For me, that's content I've generated myself, or at least that I'm expecting to be AI-generated. No one cares about the dream you had last night, and no one cares about the response you got from ChatGPT. Keep it to yourself.

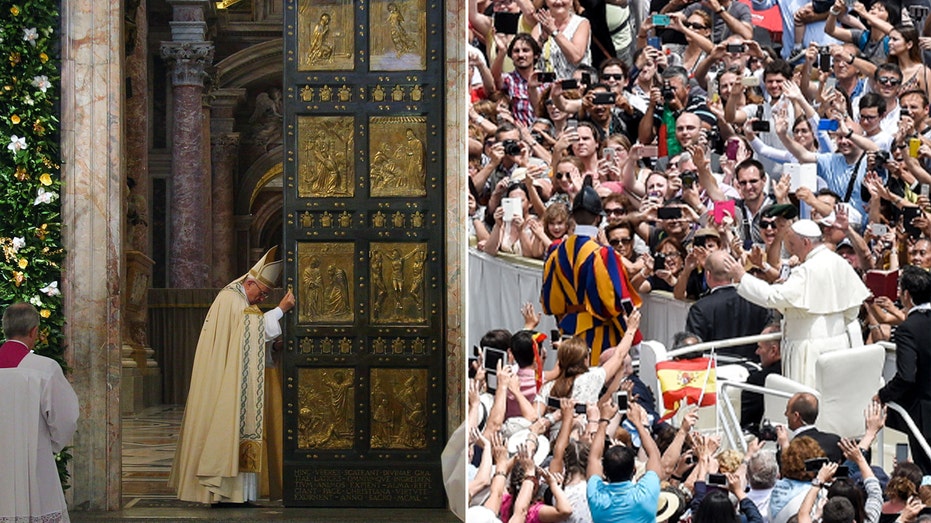
