- by foxnews

- 23 May 2025

FBI's new warning about AI-driven scams that are after your cash

FBI warns that criminals are using generative AI to exploit individuals with deceptive tactics. Kurt "CyberGuy" Knutsson explains their tactics and how to protect yourself from them.

- by foxnews

- 08 Jan 2025

- in technology

Deepfakes refer to AI-generated content that can convincingly mimic real people, including their voices, images and videos. Criminals are using these techniques to impersonate individuals, often in crisis situations. For instance, they might generate audio clips that sound like a loved one asking for urgent financial assistance or even create real-time video calls that appear to involve company executives or law enforcement officials.

The FBI has identified 17 common techniques that criminals are using to exploit generative AI technologies, particularly deepfakes, for fraudulent activities. Here is a comprehensive list of these techniques.

1) Voice cloning: Generating audio clips that mimic the voice of a family member or other trusted individuals to manipulate victims.

2) Real-time video calls: Creating fake video interactions that appear to involve authority figures, such as law enforcement or corporate executives.

3) Social engineering: Utilizing emotional appeals to manipulate victims into revealing personal information or transferring funds.

5) AI-generated images: Using synthetic images to create believable profiles on social media or fraudulent websites.

6) AI-generated videos: Producing convincing videos that can be used in scams, including investment frauds or impersonation schemes.

7) Creating fake social media profiles: Establishing fraudulent accounts that use AI-generated content to deceive others.

8) Phishing emails: Sending emails that appear legitimate but are crafted using AI to trick recipients into providing sensitive information.

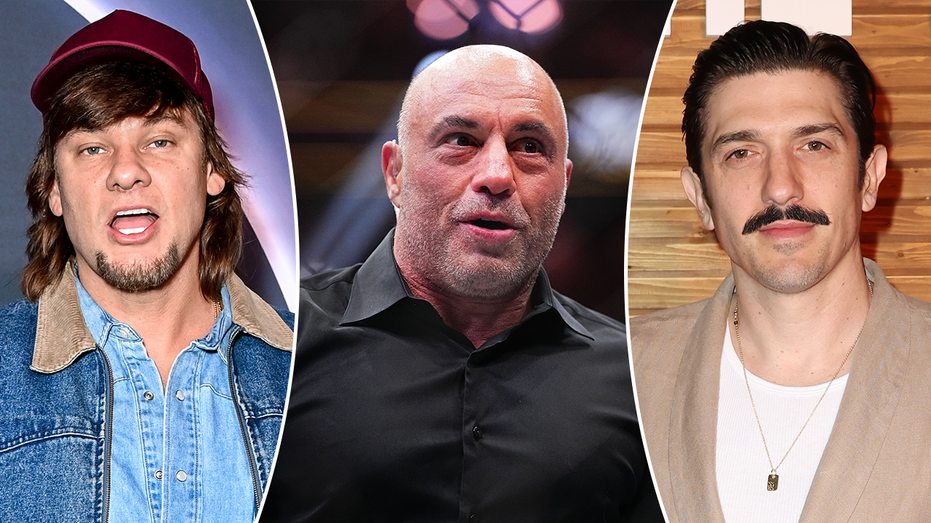

9) Impersonation of public figures: Using deepfake technology to create videos or audio clips that mimic well-known personalities for scams.

10) Fake identification documents: Generating fraudulent IDs, such as driver's licenses or credentials, for identity fraud and impersonation.

11) Investment fraud schemes: Deploying AI-generated materials to convince victims to invest in non-existent opportunities.

12) Ransom demands: Impersonating loved ones in distress to solicit ransom payments from victims.

13) Manipulating voice recognition systems: Using cloned voices to bypass security measures that rely on voice authentication.

14) Fake charity appeals: Creating deepfake content that solicits donations under false pretenses, often during crises.

15) Business email compromise: Crafting emails that appear to come from executives or trusted contacts to authorize fraudulent transactions.

16) Creating misinformation campaigns: Utilizing deepfake videos as part of broader disinformation efforts, particularly around significant events like elections.

17) Exploiting crisis situations: Generating urgent requests for help or money during emergencies, leveraging emotional manipulation.

These tactics highlight the increasing sophistication of fraud schemes facilitated by generative AI and the importance of vigilance in protecting personal information.

Implementing the following strategies can enhance your security and awareness against deepfake-related fraud.

1) Limit your online presence: Reduce the amount of personal information, especially high-quality images and videos, available on social media by adjusting privacy settings.

3) Avoid sharing sensitive information: Never disclose personal details or financial information to strangers online or over the phone.

4) Stay vigilant with new connections: Be cautious when accepting new friends or connections on social media; verify their authenticity before engaging.

5) Check privacy settings on social media: Ensure that your profiles are set to private and that you only accept friend requests from trusted individuals.

7) Verify callers: If you receive a suspicious call, hang up and independently verify the caller's identity by contacting their organization through official channels.

8) Watermark your media: When sharing photos or videos online, consider using digital watermarks to deter unauthorized use.

9) Monitor your accounts regularly: Keep an eye on your financial and online accounts for any unusual activity that could indicate fraud.

12) Create a secret verification phrase: Establish a unique word or phrase with family and friends to verify identities during unexpected communications.

13) Be aware of visual imperfections: Look for subtle flaws in images or videos, such as distorted features or unnatural movements, which may indicate manipulation.

14) Listen for anomalies in voice: Pay attention to the tone, pitch and choice of words in audio clips. AI-generated voices may sound unnatural or robotic.

15) Don't click on links or download attachments from suspicious sources: Be cautious when receiving emails, direct messages, texts, phone calls or other digital communications if the source is unknown. This is especially true if the message demands that you act fast, such as by claiming your computer has been hacked or that you have won a prize. Deepfake creators attempt to manipulate your emotions so that you download malware or share personal information. Always think before you click.

By following these tips, individuals can better protect themselves from the risks associated with deepfake technology and related scams.
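For readers who are comfortable with a little code, the warning signs in tip 15 can be sketched in a few lines of Python. This is only an illustration, not a real scam filter: the cue phrases and the `warning_signs` function name are assumptions made up for this example, loosely based on the "act fast," "hacked" and "won a prize" examples above, and they do not come from the FBI.

```python
import re

# Hypothetical cue phrases chosen for illustration only; not an FBI list.
URGENCY_CUES = (
    "act now",
    "act fast",
    "immediately",
    "urgent",
    "has been hacked",
    "won a prize",
)

# Matches anything that looks like a web link.
LINK_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def warning_signs(message: str) -> list[str]:
    """Return the reasons a message deserves a second look."""
    text = message.lower()
    # Collect every pressure phrase that appears in the message.
    reasons = [f"urgency cue: {cue!r}" for cue in URGENCY_CUES if cue in text]
    # A link on its own is not proof of a scam, but it is worth noting.
    if LINK_PATTERN.search(message):
        reasons.append("contains a link")
    return reasons


if __name__ == "__main__":
    sample = "Your computer has been hacked! Act now: http://example.test/fix"
    for reason in warning_signs(sample):
        print(reason)
```

An empty result does not mean a message is safe. A simple check like this only catches the most obvious pressure tactics, so verifying the sender through official channels still matters.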

The increasing use of generative AI technologies, particularly deepfakes, by criminals highlights a pressing need for awareness and caution. As the FBI warns, these sophisticated tools enable fraudsters to impersonate individuals convincingly, making scams harder to detect and more believable than ever. It's crucial for everyone to understand the tactics employed by these criminals and to take proactive steps to protect their personal information. By staying informed about the risks and implementing security measures, such as verifying identities and limiting online exposure, we can better safeguard ourselves against these emerging threats.


Copyright 2024 CyberGuy.com. All rights reserved.

- by foxnews

- December 09, 2016

United Airlines flight returns to Hawaii after concerning message found on bathroom mirror; FBI investigating

United Airlines Flight 1169 to Los Angeles returned to Hawaii after a "potential security concern" aboard the plane. The FBI and police are investigating.
