- by econsultancy

- 27 Feb 2023

‘AI-powered’ search is off to a problematic start. Can Google and Bing fix it?

- 24 Feb 2023

- in marketing

The era of AI-generated conversational search is, apparently, here.

On 16th December I published a piece about whether ChatGPT could pose a threat to Google, as many were already suggesting that it might, just two and a half weeks on from the chatbot's release. At the time of writing, neither Google nor Microsoft - a major backer of ChatGPT's parent organisation, OpenAI - had indicated any plans to actually integrate technology like ChatGPT into their search engines, and the idea seemed like a far-off possibility.

While ChatGPT is an impressive conversational chatbot, it has some significant drawbacks, particularly as an arbiter of facts and information: large language models (LLMs) like ChatGPT have a tendency to "hallucinate" (the technical term) and confidently state wrong information, a problem that ChatGPT's makers have called "challenging" to fix. But the idea of a chat-based search interface has its appeal. In my previous article, I speculated that a conversational search assistant could offer a lot of benefits - like the ability to ask multi-part and follow-up questions, and receive a definitive single answer - if ChatGPT's problems could be addressed.

Google at first appeared to be taking a cautious approach to the 'threat' of ChatGPT, telling staff internally that it needed to move "more conservatively than a small startup" due to the "reputational risk" involved. Yet over the past several days, Microsoft and Google have engaged in an escalating war of AI-based search announcements: Google announced Bard, a conversational search agent powered by its LLM, LaMDA, on 6th February, and Microsoft immediately followed with a press conference showing off its own conversational search capabilities using an advanced version of ChatGPT.

Google's initial hesitation over moving too quickly with AI-based search was perhaps shown to be justified when, hours before an event in which the company was due to demo Bard, Reuters spotted an error in one of the sample answers that Google had touted in its press release. Publications quickly filled up with headlines about the mistake, and Google responded by emphasising that this showed the need for "rigorous testing" of the AI technology, which it has already begun.

But is the damage already done? Have Google and Microsoft over-committed themselves to a problematic technology - or is there a way it can still be useful?

Experts have been pointing out the problems with LLMs like ChatGPT and LaMDA for some time. As I mentioned earlier, ChatGPT's own makers admit that the chatbot "sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging."

When ChatGPT was first released, users found that it would bizarrely insist on some demonstrably incorrect 'facts': the most infamous example involved ChatGPT insisting that the peregrine falcon was a marine mammal, but it has also asserted that the Royal Marines' uniforms during the Napoleonic Wars were blue (they were red), and that the largest country in Central America that isn't Mexico is Guatemala (it is in fact Nicaragua). It has also been found to struggle with maths problems, particularly when those problems are written out in words instead of numbers, and this persisted even after OpenAI released an upgraded version of ChatGPT with "improved accuracy and mathematical capabilities".

Emily M. Bender, Professor of Linguistics at the University of Washington, spoke to the newsletter Semafor Technology in January about why the presentation of LLMs as 'knowledgeable AI' is misleading. "I worry that [large language models like ChatGPT] are oversold in ways that encourage people to take what they say as if it were the result of understanding both query and input text and reasoning over it," she said.

"In fact, what they are designed to do is create plausible sounding text, without any grounding in communicative intent or accountability for truth - all while reproducing the biases of their training data." Bender also co-authored a paper on the issues with LLMs called 'On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?' alongside Timnit Gebru, the then-head of AI ethics at Google, and a number of other Google staff.

Google has enlisted a group of "trusted" external testers to give feedback on the early version of Bard; their feedback will be combined with internal testing "to make sure Bard's responses meet a high bar for quality, safety and groundedness in real-world information". The search bar in Google's demonstrations of Bard is also accompanied by a warning: "Bard may give inaccurate or inappropriate information. Your feedback makes Bard more helpful and safe."

Google has borne the brunt of the critical headlines due to Bard's James Webb Space Telescope (JWST) gaffe, in which Bard erroneously stated that "JWST took the very first pictures of a planet outside of our own solar system". However, Microsoft's integration of ChatGPT into Bing - which is currently available on an invite-only basis - also has its issues.

When The Verge asked Bing's chatbot on the day of the Microsoft press event what its parent company had announced that day, it correctly stated that Microsoft had launched a new Bing search powered by OpenAI, but also added that Microsoft had demonstrated its capability for "celebrity parodies", something that was definitely not featured in the event. It also erroneously stated that Microsoft's multibillion-dollar investment into OpenAI had been announced that day, when it was actually announced two weeks prior.

Microsoft's new Bing FAQ contains a warning not dissimilar to Google's Bard disclaimer: "Bing tries to keep answers fun and factual, but given this is an early preview, it can still show unexpected or inaccurate results based on the web content summarized, so please use your best judgment."

The answer to another FAQ question, about whether Bing's AI-generated responses are "always factual", states: "Bing aims to base all its responses on reliable sources - but AI can make mistakes, and third party content on the internet may not always be accurate or reliable. Bing will sometimes misrepresent the information it finds, and you may see responses that sound convincing but are incomplete, inaccurate, or inappropriate. Use your own judgment and double check the facts before making decisions or taking action based on Bing's responses."

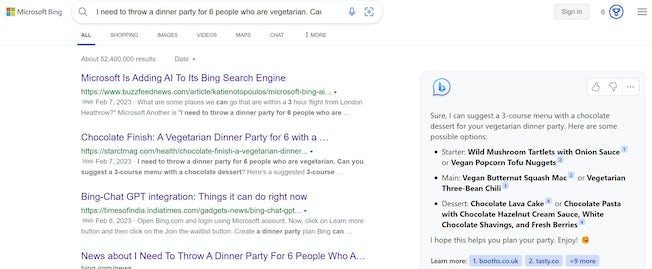

Many people would point out the irony of a search engine asking users to "double check the facts", as search engines are typically the first place that people go to fact-check things. However, to Bing's credit, web search results are either placed next to chat responses, or can easily be toggled to in the next tab. The responses from Bing's chatbot also include citations that show where the detail originated from, together with a clickable footnote that links to the source; in the chat tab, certain phrases are underlined and can be hovered over to see the search result that produced the information.

Microsoft's new chat-based Bing will position chat responses in a sidebar alongside web search results, or else house them in a separate tab, while also footnoting the sources of its responses. (Image source: screenshot of a pre-selected demo question)

This is something that Bard noticeably lacks at present, which has caused serious consternation among publishers, both because of the implications for their search traffic and because it means that Bard may be drawing information from their sites without attributing it. One SEO asked Google's John Mueller about the lack of sources in Bard, and also what proportion of searches Bard will be available for, but Mueller's response was that it's "too early to say", although he added that "your feedback can help to shape the next steps".

Ironically, citing the source of Bard's information could have softened the controversy around its James Webb Space Telescope mistake. As Marco Fonseca pointed out on Twitter, Bard wasn't as far off the mark as it seemed: NASA's website does say that "For the first time, astronomers have used NASA's James Webb Space Telescope to take a direct image of a planet outside our solar system", but the key word here is "direct". The first image of any exoplanet was taken in 2004. This makes Bard's mistake more like an error in summarising than an outright falsehood (though it was still an error).

In the Bard demonstrations that we've so far been shown, there is occasionally a small button that appears below a search response labelled 'Check it'. It's not clear exactly what this produces or whether it's only intended for testers to assess and evaluate Bard's responses, but a feature that shows how Bard assembled the information for its response would be important for transparency and fact-checking, while links back to the source would help to restore the relationship of trust with publishers.

However, it's also true that one of the main advantages of receiving a single answer in search is to avoid having to research multiple sources to come to a conclusion. In addition to this, many users would not check the originating source(s) to understand where the answer came from. Is there a way to apply generative AI in search that avoids this issue?

During the period after news had leaked that Microsoft was planning to launch an AI-powered Bing, but before it formally demonstrated one, there was plenty of speculation about how Microsoft would integrate ChatGPT's technology into Bing. One very interesting take came from Mary Jo Foley, Editor-in-Chief of the independent website Directions on Microsoft, who hypothesised that Microsoft could use ChatGPT to realise an old project, the Bing Concierge Bot.

The Bing Concierge Bot project has been around since 2016, when it was described as a "productivity agent" that would be able to run on a variety of messaging services (Skype, Telegram, WhatsApp, SMS) and help the user to accomplish tasks. The project reappeared in 2021 in a guise that sounds fairly similar to ChatGPT's current form, albeit with much less sophisticated chat functionality; the bot could return search recommendations for products such as laptops and also provide assistance with things like Windows upgrades.

Foley suggested that Bing could use ChatGPT not as a web search agent, but as more of a general assistant across various Microsoft workplace services and tools. There is a sense that this is the direction Microsoft is heading in: in addition to an AI agent to accompany web search, Microsoft has integrated ChatGPT functionality into its Edge browser, where it can summarise the contents of a document for you and also compose text, for example for a LinkedIn post. In its FAQ, Microsoft also describes the "new Bing" as "like having a research assistant, personal planner, and creative partner at your side whenever you search the web".

One of Bing's example queries for its chat-based search, suggesting a dinner party menu for six vegetarian guests, illustrates how the chatbot can save time through a combination of idea generation and research: it presents options sourced from various websites, which the searcher can read through before clicking on the recipe that sounds most appealing. An article by Wired's Aarian Marshall on how the Bing chatbot performs for various types of search noted that it excelled at meal plans and organising a grocery list, although the bot was less effective at product searches, needing a few prompts to return the right type of headphones, and in one instance surfacing discontinued products.

Similarly, Search Engine Land's Nicole Farley used Bing's chatbot to research nearby Seattle coffee shops and was able to use the chat interface to quickly find out the opening hours for the second listed result - handy. However, when she asked the chatbot which coffee shop it recommended, the bot simply generated an entirely new list of five results - not quite what was asked for.

The potential for the Bing chatbot to act as an assistant to accomplish tasks is definitely there, and in my view this would be a more productive - and less risky - direction for Microsoft to push in given LLMs' tendency to get creative with facts. However, Bing would need to narrow its vision - and the scope of its chatbot - to focus on task-based queries, which would mean compromising its ability to present itself as a competitor to Google in search. It's less glamorous than "the world's AI-powered answer engine", but an answer engine is also only as good as its answers.

What about Google's ambition for Bard? In his presentation of the new chat technology, Senior Vice President Prabhakar Raghavan indicated that Google does want to focus on queries that are more nuanced (what Google calls NORA, or 'No One Right Answer') rather than purely fact-based questions, the latter being well-served by Featured Snippets and the Knowledge Graph. However, he also waxed lyrical about Google's capacity to "Deeply understand the world's information", as well as how Bard is "Combining the world's knowledge with the power of LaMDA".

It's in keeping with Google's brand to want to present Bard as all-knowing and all-capable, but the mistake in the Bard announcement has already punctured that illusion. Perhaps the experience will encourage Google to take a more realistic approach to Bard, at least in the short term.

The demonstrations from Bing and Google have undoubtedly left many SEOs and publishers wondering how much AI-generated chatbots are going to upend the status quo. Even when a list of search results is included alongside a chatbot answer, the chatbot answer is featured more prominently, and it's not altogether clear what mechanism causes certain websites to be surfaced in a chatbot response.

Do chatbots select from the top ranking websites? Is it about relevance? Are some sites easier to pull information from than others? And is that really a good thing, given that searchers reading a rehashed summary of a website's key points in a chatbot response may not bother to click through to the originating site? Can AI-generated chatbot answers be optimised for?

There are a lot of unanswered questions that could become more urgent, but only if AI-powered search catches on. Bing is only rolling out its new search experience in a limited fashion (again, cognisant of the risks involved), and Google, while having promised to release Bard to the public "in the coming weeks", has noticeably avoided committing to a release date. The reviews coming from those who have tried out Bing's new chat experience are mixed, and it's not altogether clear that LLMs offer a better search experience than a user could get by running a few searches themselves.

Satya Nadella, in his introduction to Microsoft's presentation of the AI-powered Bing, said it was "high time" for innovation in search. To be sure, there is a growing sense that the experience of search has got progressively 'worse' over the past several years - just Google (or Bing, if you fancy) "search has got worse" to see the evidence of this sentiment around the web. What's not certain is whether generative AI is the solution to this problem, or whether it will add to it.
